Welcome to this week’s roundup of handwritten AI news.

This week OpenAI said its AI voice cloner was too good to release.

An NYC-approved chatbot gave residents dodgy legal advice.

And researchers built an AI that knows what will happen in the future.

Let’s dig in.

AI and the feds

AI is set for deeper integration in US government operations. The White House announced new AI rules for federal agencies to ensure they use the tech in a way that is safe and doesn’t compromise the rights of the people they serve.

The US government will also launch a big hiring drive to employ 100 “AI professionals” to deploy to federal agencies. Good luck finding AI talent that hasn’t already been snapped up by Meta et al.

US and UK ministers met to establish a bilateral agreement on AI safety. The almost complete absence of UK AI regulation may see the US take the lead in forming critical policy.

The agreement says the countries will cooperate and share research in some interesting areas.

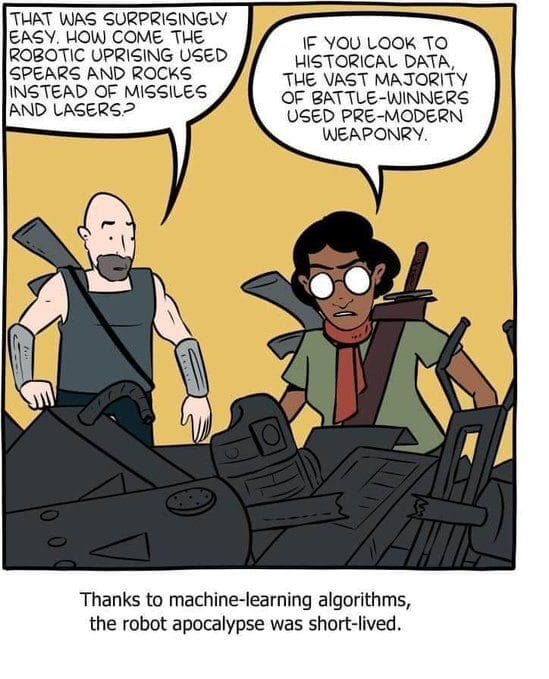

If they don’t manage to agree on how to keep us safe from AI, let’s hope a glitch in the training data saves us.

Source: Pinterest

I’m pretty sure it’s legal

If you’re looking for a good reason why the deployment of AI in government agencies needs regulation, then look no further than New York City. The city launched an AI chatbot to help residents and businesses navigate government regulations, and it’s advising people to break the law.

The NYC Office of Technology and Innovation says people should chill because most of what the chatbot says is true and besides, it’s a “work in progress.”

Google’s DeepMind may be able to help with issues like this. It developed SAFE, an AI agent that can fact-check LLMs. For now, SAFE just gives us an interesting way to benchmark which AI model delivers the most factual long-form answers.

Could it eventually help cure AI’s tendency to make stuff up?

DeepMind also developed Genie, a foundation model for building 2D game environments from images or text prompts.

The researchers assure us their text-to-game model isn’t just a way to get kids to make and play more computer games. It has interesting applications for robotics too.

Where do I sign up for it? I intend to use Genie purely for robotics research. And maybe to recreate my version of Mario Brothers.

Prime AI

The AI model leaderboard is hotly contested, but there’s no doubt which model Amazon thinks will win. The company just invested another $2.75 billion in Anthropic, the maker of the Claude models.

Apple is yet to release its own LLM, but there has been a flurry of AI developments at the company after what seemed to be a slow start. Apple researchers developed ReALM, a model that ‘sees’ on-screen visuals better than GPT-4.

Could Siri get an AI upgrade soon?

Who said that?

AI voice clones are already good and keep getting better. OpenAI gave us a few samples of how good Voice Engine, its voice cloning text-to-speech model, is.

The company says initial testing shows there are some great use cases for the tech. However, because Voice Engine is so good, the company says it might be too risky to release.

Speaking of risky behavior, is a relationship with an AI companion more or less risky than the real thing?

AI relationships seem to be on the rise. Want to know which countries are most interested in AI girlfriends? Here’s a full rundown of global AI girlfriend trends. You may be surprised who likes the idea and who doesn’t.

Humans are weird, so it’s unsurprising that training an AI to act how we want it to is a challenge. A new study attempted to align AI with crowdsourced human values. Is that a good thing?

Imagine if crowdsourced values were used to train an AI in Germany in 1939, or at the height of the slave trade.

Our faith in humanity was tested when we forgot to check the calendar before getting excited over this X post from Hugging Face engineer Philipp Schmid.

Sam should have pranked him by just releasing it.

Future power

Power usage in the AI industry continues to draw attention for all the wrong reasons. At NVIDIA’s GTC event, Delta Electronics unveiled new energy-efficient AI hardware that could help with that.

It may not grab headlines like the Blackwell GPUs, but the tech is impressive and so is the amount of power it will save.

What does the future of AI hold? Who will win the US election? Will humans go to Mars before 2030?

We might get the best answer by asking GPT-4. Berkeley researchers built an AI forecasting system that is more accurate than humans. It does have a strange quirk though.

In other news…

Here are some other clickworthy AI stories we enjoyed this week:

Microsoft and OpenAI are discussing a partnership to build the $100B Stargate supercomputer.

Google wants to run Gemini on its Pixel 8 phones but says it will require at least 8GB of RAM.

Elon Musk estimates there’s a 20% chance AI will destroy humanity, but says that shouldn’t stop AI development. His was one of the more optimistic estimates.

NYC to test AI gun detection on the subway. Previous tests of the tech didn’t go very well.

OpenAI says you can use ChatGPT without signing up now. But there’s a catch.

Opera allows users to download and use LLMs locally.

Did Emad Mostaque leave Stability AI over unpaid bills?

And that’s a wrap.

Let’s hope AI gets rolled out faster in US federal agencies. If you’ve ever had to deal with the DMV or the Labor Department, then you know it could only get better. Right?

Groundbreaking AI developments are being made daily, but the tech I really want to play with is DeepMind’s Genie. I want to use AI to make 2D platform games like we had back in the ’80s. Is that a little weird? Who’s with me on this?

AI girlfriends: a good idea for lonely people or just weird? I’m thinking a digital twin of your significant other could let you run simulations that head off a lot of arguments before they start.

Let us know what you think, and send us links to any juicy AI stories we may have missed.