As AI-powered image generators have become more accessible, so have websites that digitally remove the clothes of people in photos. One of these sites has an unsettling feature that provides a glimpse of how these apps are used: two feeds of what appear to be photos uploaded by users who want to “nudify” the subjects.

The feeds of images are a shocking display of intended victims. WIRED saw some images of girls who were clearly children. Other photos showed adults and had captions indicating that they were female friends or female strangers. For visitors who aren’t logged in, the site’s homepage does not display any fake nude images that may have been produced.

People who want to create and save deepfake nude images are asked to log in to the site using a cryptocurrency wallet. Pricing isn’t currently listed, but in a 2022 video posted by an affiliated YouTube page, the website let users buy credits to create deepfake nude images, starting at 5 credits for $5. WIRED learned about the site from a post on a subreddit about NFT marketplace OpenSea, which linked to the YouTube page. After WIRED contacted YouTube, the platform said it terminated the channel; Reddit told WIRED that the user had been banned.

WIRED is not identifying the website, which is still online, to protect the women and girls who remain on its feeds. The site’s IP address, which went live in February 2022, belongs to internet security and infrastructure provider Cloudflare. When asked about its involvement, company spokesperson Jackie Dutton noted the difference between providing a site’s IP address, as Cloudflare does, and hosting its contents, which it does not.

WIRED notified the National Center for Missing & Exploited Children, which helps report cases of child exploitation to law enforcement, about the site’s existence.

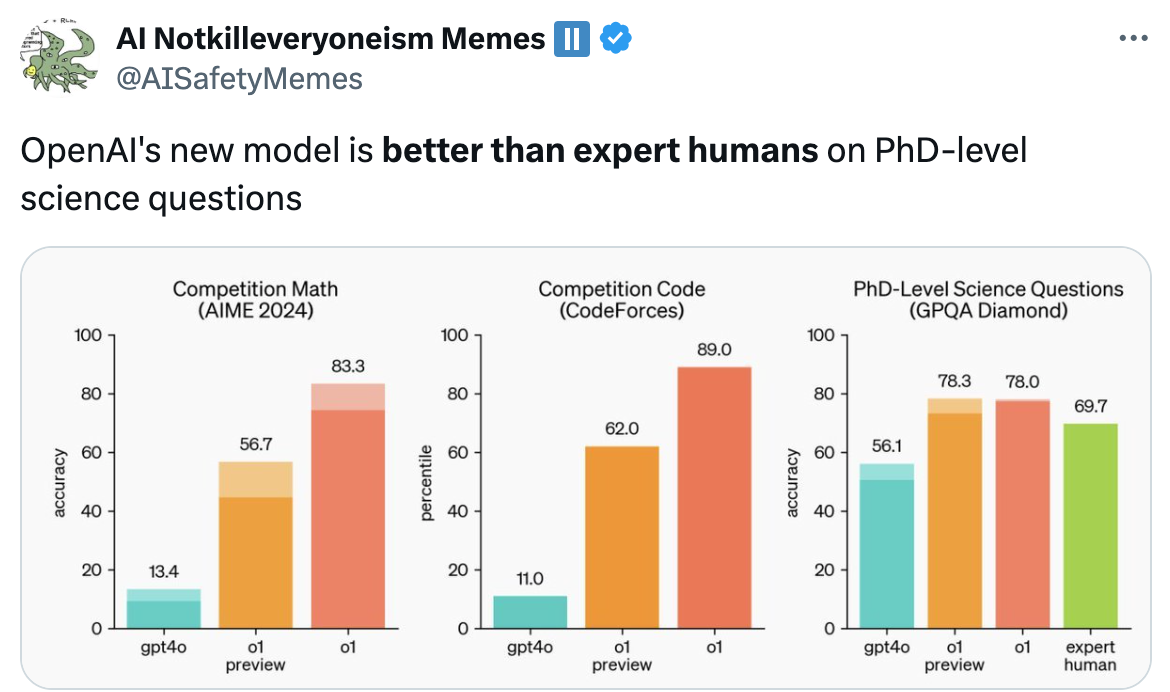

AI developers like OpenAI and Stability AI say their image generators are for commercial and artistic uses and have guardrails to prevent harmful content. But open source AI image-making technology is now relatively powerful and creating pornography is one of the most popular use cases. As image generation has become more readily available, the problem of nonconsensual nude deepfake images, most often targeting women, has grown more widespread and severe. Earlier this month, WIRED reported that two Florida teenagers were arrested for allegedly creating and sharing AI-generated nude images of their middle school classmates without consent, in what appears to be the first case of its kind.

Mary Anne Franks, a professor at the George Washington University School of Law who has studied the problem of nonconsensual explicit imagery, says that the deepnude website highlights a grim reality: There are far more incidents involving nonconsensual AI-generated nude images of women and minors than the public currently knows about. The few public cases came to light only because the images were shared within a community, and someone heard about it and raised the alarm.

“There’s gonna be all kinds of sites like this that are impossible to chase down, and most victims have no idea that this has happened to them until someone happens to flag it for them,” Franks says.

Nonconsensual Images

The website reviewed by WIRED has feeds with apparently user-submitted photos on two separate pages. One is labeled “Home” and the other “Explore.” Several of the photos clearly showed girls under the age of 18.

One image showed a young girl with a flower in her hair standing against a tree. Another showed a girl in what appears to be a middle or high school classroom. The photo, seemingly taken discreetly by a classmate, is captioned “PORN.”