At the same time, it will improve our cybersecurity, because improvements in security can also be amplified a million-fold. So one of the big questions is whether cyber offense or cyber defense will have a natural advantage as this stuff scales. What does that look like over the long term? I don’t know the answer to that question.

Do you think it’s at all possible that we will enter any kind of AI winter or a slow-down at any point? Or is this just hockey-stick growth, as the tech people like to say?

It’s hard to imagine it significantly slowing down right now. Instead, there seems to be a positive feedback loop where the more investment you put in, the more investment you’re able to put in because you’ve scaled up.

So I don’t think we’ll see an AI winter, but I don’t know. RAND has had some fanciful forecasting experiments in the past. There was a project that we did in the 1950s to forecast what the year 2000 would be like, and there were lots of predictions of flying cars and jet packs, whereas we didn’t get the personal computer right at all. So forecasting out too far ends up being probably no better than a coin flip.

How concerned are you about AI being used in military attacks, such as in drones?

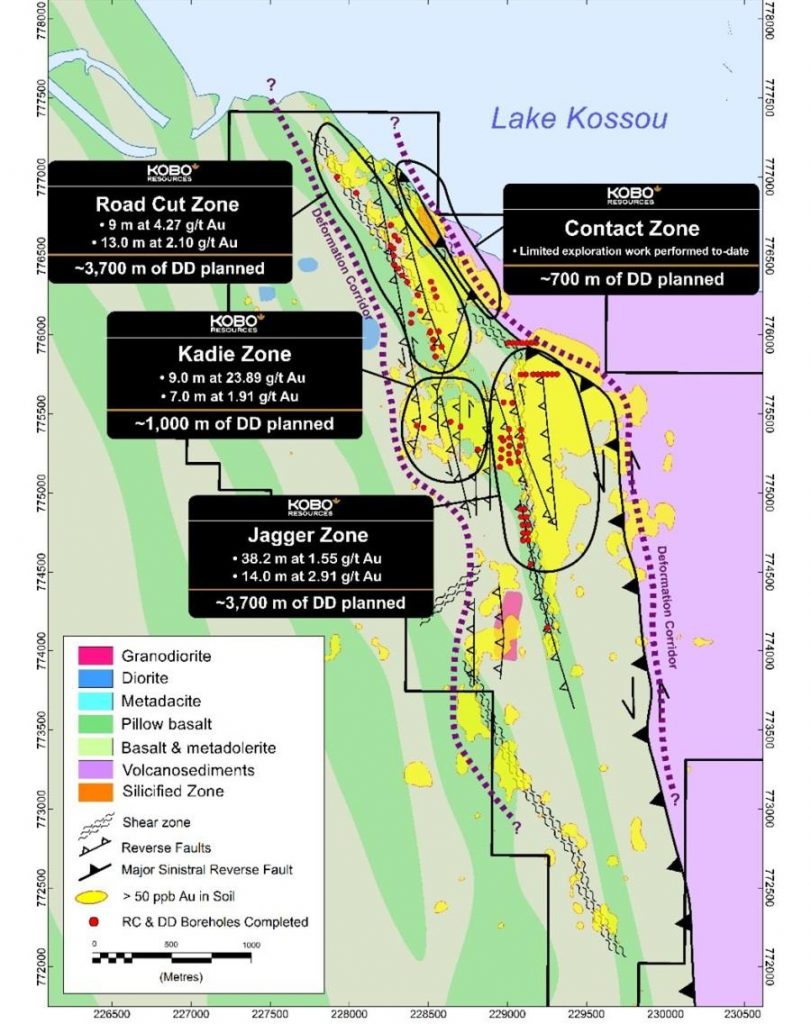

There are a lot of reasons why countries are going to want to make autonomous weapons. One of the reasons we’re seeing is in Ukraine, which is this kind of petri dish of autonomous weapons. The radio jamming that’s used makes it very tempting to want to have autonomous weapons that no longer need to phone home.

But I think cyber [warfare] is the realm where autonomy has the highest benefit-cost ratio, both because of its speed and because of its penetration depth in places that can’t communicate.

But how are you thinking about the moral and ethical implications of autonomous drones that have high error rates?

I think the empirical work that’s been done on error rates has been mixed. [Some analyses] found that autonomous weapons probably had lower miss rates and probably resulted in fewer civilian casualties, in part because [human] combatants sometimes make bad decisions under stress and under the risk of harm. In some cases, there could be fewer civilian deaths as a result of using autonomous weapons.

But this is an area where it is so hard to know what the future of autonomous weapons is going to look like. Many countries have banned them entirely. Other countries are sort of saying, “Well, let’s wait and see what they look like and what their accuracy and precision are before making decisions.”

I think one of the other questions is whether autonomous weapons are more advantageous to countries that have a strong rule of law than to those that don’t. One reason to be very skeptical of autonomous weapons is that they’re very cheap. Having very weak human capital, but lots of money to burn and a supply chain you can access, characterizes wealthier autocracies more than it does democracies that have a strong investment in human capital. It’s possible that autonomous weapons will be advantageous to autocracies more than democracies.