Leah Feiger: Absolutely.

Vittoria Elliott: Literally, this man spoke to Reuters and was like, “Yeah, we built it on top of ChatGPT.” Would OpenAI have ever caught that usage of it?

Leah Feiger: Right.

Vittoria Elliott: We don’t know.

Leah Feiger: Yeah, like you said, it’s very hard to ID these things.

Vittoria Elliott: Yeah. The techniques we have for them are really nascent, and they’re voluntary most of the time.

Leah Feiger: Yeah, they rely on self-admission and good actors. People that are willing to say, “Yeah, I used this.” Or, “Is this OK for me to use in this way?” Interesting. Well, that’s a lot of different examples there, and some of them actually sound pretty scary, but others sound less scary. Translating a speech actually doesn’t sound like the worst thing to me. That’s just about informing voters.

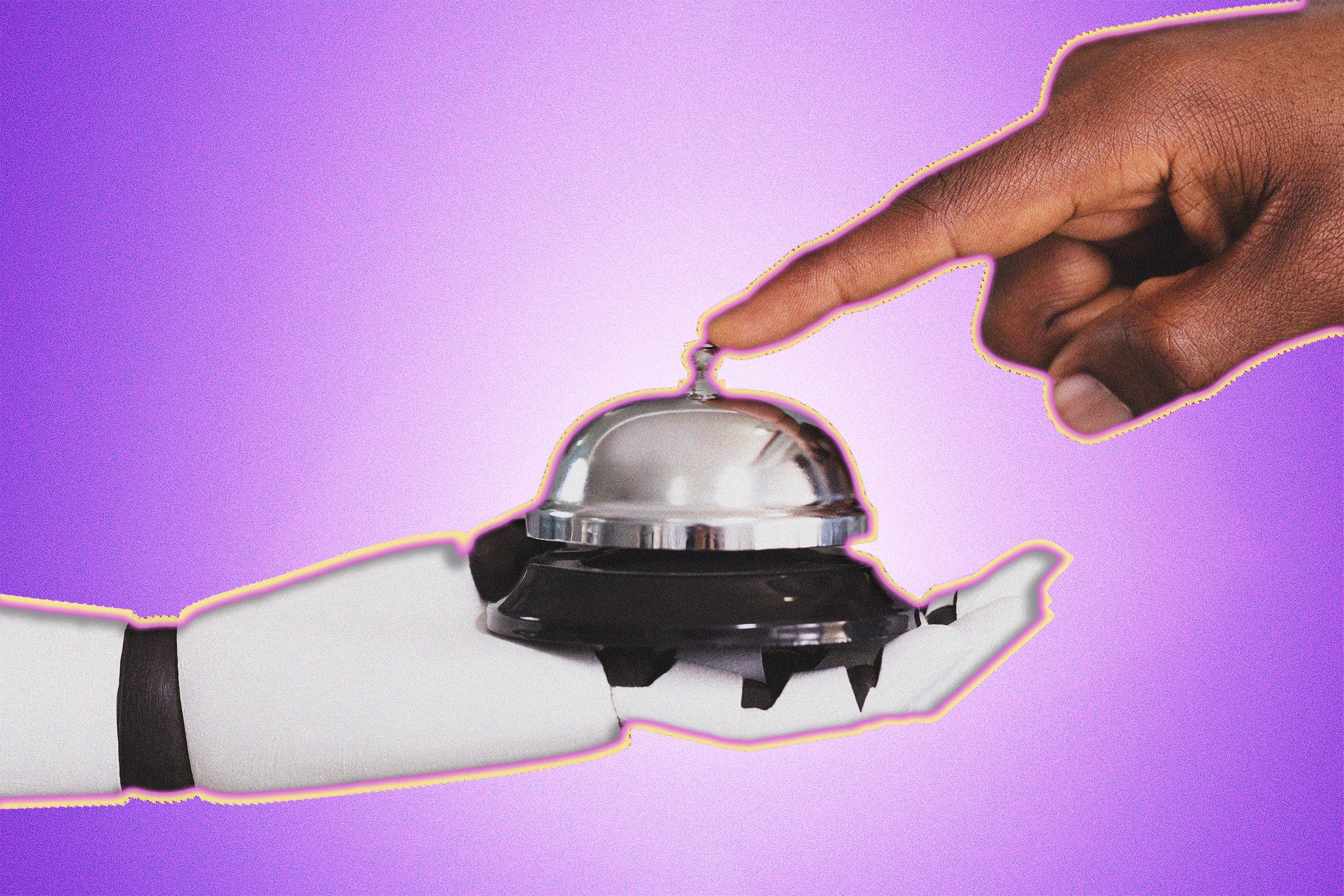

Vittoria Elliott: Totally. I think a lot of the conversation around generative AI has been along the lines of mis- and disinformation. People are really worried about how technology can be used to deceive.

Leah Feiger: Absolutely. It can be. As we talked about at the top of this, maybe there are some voters in South Africa that were just so pumped to hear that Eminem was supporting their candidate.

Vittoria Elliott: It’s also powerful in other ways, like it can be used for satire. Which is actually, as we’ll talk about in the second segment, how it kicked off in India: it was a satire thing. It can help rally people. But the problem is that even when people know something’s fake, it can still be really emotional. If you’re seeing an image or hearing someone say something that feels important to you, even if you are like, “I know this isn’t real …”

Leah Feiger: Sure.

Vittoria Elliott: There’s something that’s very emotive about that.

Leah Feiger: Like what? Give me an example there.

Vittoria Elliott: In a story we just released this week, we looked at the AfD, the far-right party in Germany that a German court has actually recently designated as potentially anti-democratic and extremist. They ran an ad on Meta, on Facebook and Instagram, that showed an image of a white woman with injuries to her face that, based on the researcher’s findings, appeared to have probably been manipulated with generative AI. The text said, “Crime from immigrants has gone up.” You get things like that where maybe someone could look at that image of this woman and be like, “That seems like a stock photo.” But if you have those fears or those views, and you’re seeing that image and you’re seeing that text, and a politician is saying, “Yeah, we are really concerned about immigrant crime.”

Leah Feiger: You’d be scared.

Vittoria Elliott: That can still be very emotional, because it may tap into something you already feel or believe.

Leah Feiger: Of course. Even with these dead politicians rising from the grave in India and Indonesia to speak to their electorate, people know they’re dead. People know that their old leaders are dead. But how lovely for them to make a comeback and say, “Please vote for XYZ person.” Even though you know it’s fake, there is some emotional pull, some resonance there.